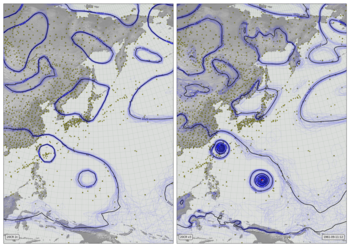

Galveston Hurricane (1900) video (spinup comparison)

The thin blue lines are mean-sea-level pressure (mslp) contours from each of 56 ensemble members (the first 56 members of each stream). The thicker black lines are contours of the ensemble mean. The yellow dots mark pressure observations assimilated while making the field shown. The red dots are the IBTRACS best-track observations for unnamed tropical storms - the southernmost is the Galveston Hurricane.

Data for this period are available from two 20CRv3 streams: the production stream, starting in September 1894, and a subsequent stream, starting in September 1899, which is imperfectly spun up by the date of the hurricane. This video compares the two streams.

Script to make an individual frame - takes year, month, day, and hour as command-line options:

#!/usr/bin/env python

# US region weather plot
# Compare pressures from 20CRV3 spinup and production
# Video version.

import os
import math
import datetime
import numpy
import pandas

import iris
import iris.analysis

import matplotlib
from matplotlib.backends.backend_agg import \
    FigureCanvasAgg as FigureCanvas
from matplotlib.figure import Figure

import cartopy
import cartopy.crs as ccrs

import Meteorographica as mg
import IRData.twcr as twcr

# Get the datetime to plot from commandline arguments
import argparse
parser = argparse.ArgumentParser()
parser.add_argument("--year", help="Year",
                    type=int,required=True)
parser.add_argument("--month", help="Integer month",
                    type=int,required=True)
parser.add_argument("--day", help="Day of month",
                    type=int,required=True)
parser.add_argument("--hour", help="Time of day (0 to 23.99)",
                    type=float,required=True)
parser.add_argument("--opdir", help="Directory for output files",
                    default="%s/images/Galveston_Hurricane_sp" % \
                                           os.getenv('SCRATCH'),
                    type=str,required=False)
args = parser.parse_args()

# Create the output directory if it does not already exist
if not os.path.isdir(args.opdir):
    os.makedirs(args.opdir)

# The fractional part of the hour gives the minutes (18.25 is 18:15)
dte=datetime.datetime(args.year,args.month,args.day,
                      int(args.hour),int(args.hour%1*60))

# HD video size 1920x1080
aspect=16.0/9.0
fig=Figure(figsize=(10.8*aspect,10.8),  # Width, Height (inches)
           dpi=100,
           facecolor=(0.88,0.88,0.88,1),
           edgecolor=None,
           linewidth=0.0,
           frameon=False,
           subplotpars=None,
           tight_layout=None)
canvas=FigureCanvas(fig)

# US-centred projection
projection=ccrs.RotatedPole(pole_longitude=110, pole_latitude=56)
scale=30
extent=[scale*-1*aspect/2,scale*aspect/2,scale*-1,scale]

# Two side-by-side plots
ax_2c=fig.add_axes([0.01,0.01,0.485,0.98],projection=projection)
ax_2c.set_axis_off()
ax_2c.set_extent(extent, crs=projection)
ax_3=fig.add_axes([0.505,0.01,0.485,0.98],projection=projection)
ax_3.set_axis_off()
ax_3.set_extent(extent, crs=projection)

# Background, grid and land for both
ax_2c.background_patch.set_facecolor((0.88,0.88,0.88,1))
ax_3.background_patch.set_facecolor((0.88,0.88,0.88,1))
mg.background.add_grid(ax_2c)
mg.background.add_grid(ax_3)
land_img_2c=ax_2c.background_img(name='GreyT', resolution='low')
land_img_3=ax_3.background_img(name='GreyT', resolution='low')

# Spinup panel (left-hand axes, ax_2c)
# Add the observations from spinup
obs=twcr.load_observations_fortime(dte,version='4.5.1.spinup')
mg.observations.plot(ax_2c,obs,radius=0.15)

# Highlight the Hurricane obs
obs_h=obs[obs.Name=='NOT NAMED']
if not obs_h.empty:
    mg.observations.plot(ax_2c,obs_h,radius=0.25,facecolor='red',
                         zorder=100)

# load the spinup pressures
prmsl=twcr.load('prmsl',dte,version='4.5.1.spinup')

# Contour spaghetti plot of ensemble members
prmsl_r=prmsl.extract(iris.Constraint(member=list(range(0,56))))
mg.pressure.plot(ax_2c,prmsl_r,scale=0.01,type='spaghetti',
                 resolution=0.25,
                 levels=numpy.arange(870,1050,10),
                 colors='blue',
                 label=False,
                 linewidths=0.1)

# Add the ensemble mean
prmsl_m=prmsl.collapsed('member', iris.analysis.MEAN)
mg.pressure.plot(ax_2c,prmsl_m,scale=0.01,
                 resolution=0.25,
                 levels=numpy.arange(870,1050,10),
                 colors='black',
                 label=False,
                 linewidths=2)

# 20CR3 spinup label
mg.utils.plot_label(ax_2c,'20CR3 spinup',
                    facecolor=fig.get_facecolor(),
                    x_fraction=0.02,
                    horizontalalignment='left')

# V3 panel
# Add the observations from v3
obs=twcr.load_observations_fortime(dte,version='4.5.1')
mg.observations.plot(ax_3,obs,radius=0.15)

# Highlight the Hurricane obs
obs_h=obs[obs.Name=='NOT NAMED']
if not obs_h.empty:
    mg.observations.plot(ax_3,obs_h,radius=0.25,facecolor='red',
                         zorder=100)

# load the V3 pressures
prmsl=twcr.load('prmsl',dte,version='4.5.1')

# Contour spaghetti plot of ensemble members
# Only use 56 members to match v2c
prmsl_r=prmsl.extract(iris.Constraint(member=list(range(0,56))))
mg.pressure.plot(ax_3,prmsl_r,scale=0.01,type='spaghetti',
                 resolution=0.25,
                 levels=numpy.arange(870,1050,10),
                 colors='blue',
                 label=False,
                 linewidths=0.1)

# Add the ensemble mean
prmsl_m=prmsl.collapsed('member', iris.analysis.MEAN)
mg.pressure.plot(ax_3,prmsl_m,scale=0.01,
                 resolution=0.25,
                 levels=numpy.arange(870,1050,10),
                 colors='black',
                 label=False,
                 linewidths=2)

# 20CR3 production label
mg.utils.plot_label(ax_3,'20CR3 production',
                    facecolor=fig.get_facecolor(),
                    x_fraction=0.02,
                    horizontalalignment='left')

# Date and hour label
mg.utils.plot_label(ax_3,
                    ('%04d-%02d-%02d:%02d' %
                     (args.year,args.month,args.day,args.hour)),
                    facecolor=fig.get_facecolor(),
                    x_fraction=0.98,
                    horizontalalignment='right')

# Output as png
fig.savefig('%s/V3vV2c_Galveston_sp_%04d%02d%02d%02d%02d.png' %
            (args.opdir,args.year,args.month,args.day,
             int(args.hour),int(args.hour%1*60)))
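The script passes sub-hourly times as a fractional `--hour` value and splits it into whole hours and minutes with `int(hour)` and `int(hour%1*60)`, both for the datetime and for the output file name. The sketch below isolates that conversion so it can be checked on its own. The helper names `split_hour` and `frame_file_name` are hypothetical, introduced here for illustration only; they are not part of the script above.

```python
import datetime


def split_hour(hour):
    """Split a fractional hour (0 to 23.99) into integer (hour, minute).

    Hypothetical helper: mirrors the int(hour), int(hour%1*60)
    expressions used in the frame script.
    """
    return int(hour), int(hour % 1 * 60)


def frame_file_name(year, month, day, hour):
    """Build the frame file name using the same pattern as the script."""
    h, m = split_hour(hour)
    return "V3vV2c_Galveston_sp_%04d%02d%02d%02d%02d.png" % (
        year, month, day, h, m)


# Quarter hours map onto 0, 15, 30 and 45 minutes
for fraction in (0, 0.25, 0.5, 0.75):
    h, m = split_hour(18 + fraction)
    print(datetime.datetime(1900, 9, 8, h, m),
          frame_file_name(1900, 9, 8, 18 + fraction))
```

Quarter-hour fractions are exactly representable in binary floating point, so the truncation is safe for 15-minute steps. Other fractions (18.2, for example) can truncate to the previous minute.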

To make the video, the script above must be run more than a thousand times - once for every 15-minute period. The best way to do this is system dependent - the script below does it on the Met Office SPICE cluster, and it will need modification to run on any other system. (It could be done on a single PC, but it would take many hours.)

#!/usr/bin/env python

# Make all the individual frames for a movie
# run the jobs on SPICE.

import os
import subprocess
import datetime

max_jobs_in_queue=500

# Where to put the output files
opdir="%s/slurm_output" % os.getenv('SCRATCH')
if not os.path.isdir(opdir):
    os.makedirs(opdir)

start_day=datetime.datetime(1900, 9, 1, 0)
end_day  =datetime.datetime(1900, 9, 17, 23)

# Function to check if the job is already done for this timepoint
def is_done(year,month,day,hour):
    op_file_name=("%s/images/Galveston_Hurricane_sp/" +
                  "V3vV2c_Galveston_sp_%04d%02d%02d%02d%02d.png") % (
                            os.getenv('SCRATCH'),
                            year,month,day,int(hour),
                            int(hour%1*60))
    if os.path.isfile(op_file_name):
        return True
    return False

current_day=start_day
while current_day<=end_day:
    # Count the lines of squeue output to estimate the jobs already queued
    # (the user name is specific to the original system).
    # universal_newlines=True returns text, so this also works in Python 3
    queued_jobs=subprocess.check_output('squeue --user hadpb',
                                         shell=True,
                                         universal_newlines=True).count('\n')
    max_new_jobs=max_jobs_in_queue-queued_jobs
    while max_new_jobs>0 and current_day<=end_day:
        # One batch job per hour, running up to four frames in parallel
        f=open("multirun.slm","w+")
        f.write('#!/bin/ksh -l\n')
        f.write('#SBATCH --output=%s/Galveston_sp_frame_%04d%02d%02d%02d.out\n' %
                   (opdir,
                    current_day.year,current_day.month,
                    current_day.day,current_day.hour))
        f.write('#SBATCH --qos=normal\n')
        f.write('#SBATCH --ntasks=4\n')
        f.write('#SBATCH --ntasks-per-core=1\n')
        f.write('#SBATCH --mem=40000\n')
        f.write('#SBATCH --time=10\n')
        count=0
        for fraction in (0,.25,.5,.75):
            # Skip frames that have already been rendered
            if is_done(current_day.year,current_day.month,
                       current_day.day,current_day.hour+fraction):
                continue
            cmd=("./Galveston_V3vV2c.py --year=%d --month=%d" +
                 " --day=%d --hour=%f &\n") % (
                   current_day.year,current_day.month,
                   current_day.day,current_day.hour+fraction)
            f.write(cmd)
            count=count+1
        f.write('wait\n')
        f.close()
        current_day=current_day+datetime.timedelta(hours=1)
        # Only submit the job if it has at least one frame to make
        if count>0:
            max_new_jobs=max_new_jobs-1
            rc=subprocess.call('sbatch multirun.slm',shell=True)
        os.unlink('multirun.slm')
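For a system without a batch scheduler, the same set of frames can be enumerated and rendered locally. The following is a minimal sketch, not the author's method: it assumes `Galveston_V3vV2c.py` is executable in the current directory (as the SPICE script does), and the names `frame_times`, `frame_command` and `run_all`, along with the worker count, are hypothetical choices made for illustration.

```python
import datetime
import subprocess
from concurrent.futures import ThreadPoolExecutor

# Same period as the SPICE script above
START = datetime.datetime(1900, 9, 1, 0)
END = datetime.datetime(1900, 9, 17, 23)


def frame_times(start=START, end=END):
    """Yield (year, month, day, fractional_hour) for each 15-minute frame."""
    current = start
    while current <= end:
        for fraction in (0, 0.25, 0.5, 0.75):
            yield (current.year, current.month, current.day,
                   current.hour + fraction)
        current += datetime.timedelta(hours=1)


def frame_command(year, month, day, hour):
    """Build the argument list for one run of the frame script."""
    return ["./Galveston_V3vV2c.py",
            "--year=%d" % year, "--month=%d" % month,
            "--day=%d" % day, "--hour=%f" % hour]


def run_all(max_workers=4):
    """Render every frame, running max_workers scripts at a time.

    Threads are enough here: each one only waits for a child process.
    Returns the list of exit codes, in frame order.
    """
    commands = [frame_command(*t) for t in frame_times()]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(subprocess.call, commands))


# Number of frames in the period (run_all() is not called here)
print(len(list(frame_times())))
```

Calling `run_all()` would start the rendering. Unlike the SPICE script, this sketch does not skip frames that already exist, so an interrupted run would start again from the beginning unless a check like `is_done` were added.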

To turn the resulting images into a movie, use ffmpeg:

ffmpeg -r 24 -pattern_type glob -i Galveston_Hurricane_sp/\*.png \
       -c:v libx264 -threads 16 -preset slow -tune animation \
       -profile:v high -level 4.2 -pix_fmt yuv420p -crf 25 \
       -c:a copy Galveston_Hurricane_sp.mp4
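Because ffmpeg simply encodes whichever files match the glob, a failed batch job shows up only as a jump in the video. A quick check before encoding can list any missing frames. The sketch below assumes the file-name pattern used by the frame script and the 1 to 17 September 1900 period from the SPICE script; the function names `expected_frames` and `missing_frames` are hypothetical.

```python
import datetime
import os


def expected_frames(start, end, step_minutes=15):
    """List the frame file names expected between start and end (inclusive)."""
    names = []
    current = start
    while current <= end:
        names.append("V3vV2c_Galveston_sp_%04d%02d%02d%02d%02d.png" % (
            current.year, current.month, current.day,
            current.hour, current.minute))
        current += datetime.timedelta(minutes=step_minutes)
    return names


def missing_frames(image_dir, names):
    """Return the expected file names that are not present in image_dir."""
    return [n for n in names
            if not os.path.isfile(os.path.join(image_dir, n))]


names = expected_frames(datetime.datetime(1900, 9, 1, 0, 0),
                        datetime.datetime(1900, 9, 17, 23, 45))
# At 24 frames per second, each second of video covers six hours
print("%d frames, %.0f s of video at 24 fps" % (len(names), len(names) / 24.0))
```

With the image directory as its first argument, `missing_frames` returns the file names still to be rendered; an empty list means the sequence is complete.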